
Apple has suspended a new artificial intelligence (AI) feature that drew criticism and complaints for making repeated mistakes in its summaries of news headlines.

The tech giant had been facing mounting pressure to withdraw the service, which sent notifications that appeared to come from within news organisations’ apps.

“We are working on improvements and will make them available in a future software update,” an Apple spokesperson said.

Journalism body Reporters Without Borders (RSF) said it showed the dangers of rushing out new features.

“Innovation must never come at the expense of the right of citizens to receive reliable information,” it said in a statement.

“This feature should not be rolled out again until there is zero risk it will publish inaccurate headlines,” RSF’s Vincent Berthier added.

False reports

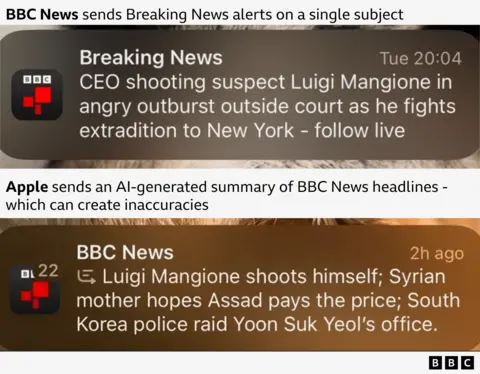

The BBC was among the groups to complain about the feature, after an alert generated by Apple’s AI falsely told some readers that Luigi Mangione, the man accused of killing UnitedHealthcare CEO Brian Thompson, had shot himself.

The feature had also inaccurately summarised headlines from Sky News, the New York Times and the Washington Post, according to reports from journalists and others on social media.

“There is a huge imperative [for tech firms] to be the first one to release new features,” said Jonathan Bright, head of AI for public services at the Alan Turing Institute.

Hallucinations, where an AI model makes things up, are a “real concern,” he added, “and as yet firms do not have a way of systematically guaranteeing that AI models will never hallucinate, apart from human oversight.

“As well as misinforming the public, such hallucinations have the potential to further damage trust in the news media,” he said.

Media outlets and press groups had pushed the company to pull back, warning that the feature was not ready and that AI-generated errors were adding to issues of misinformation and falling trust in news.

The BBC complained to Apple in December, but the company did not respond until January, when it promised a software update that would clarify the role of AI in creating the summaries, which were optional and only available to readers with the latest iPhones.

That prompted a further wave of criticism that the tech giant was not going far enough.

Apple has now decided to disable the feature entirely for news and entertainment apps.

“With the latest beta software releases of iOS 18.3, iPadOS 18.3, and macOS Sequoia 15.3, Notification summaries for the News & Entertainment category will be temporarily unavailable,” an Apple spokesperson said.

The company said that for other apps, the AI-generated summaries of app alerts will appear in italicised text.

“We are pleased that Apple has listened to our concerns and is pausing the summarisation feature for news,” a BBC spokesperson said.

“We look forward to working with them constructively on next steps. Our priority is the accuracy of the news we deliver to audiences, which is essential to building and maintaining trust.”

Analysis: A rare U-turn from Apple

Apple is usually robust about its products and does not often even respond to criticism.

This simple statement from the tech giant speaks volumes about just how damaging the mistakes made by its much-hyped new AI feature really are.

Not only was it inadvertently spreading misinformation by generating inaccurate summaries of news stories, it was also harming the reputation of news organisations like the BBC, whose lifeblood is their trustworthiness, by displaying the false headlines next to their logos.

Not a great look for a newly launched service.

AI developers have always said that the technology tends to “hallucinate” (make things up), and AI chatbots all carry disclaimers saying the information they provide should be double-checked.

But AI-generated content is increasingly given prominence, including summaries at the top of search engine results, and that in itself implies that it is reliable.

Even Apple, with all the financial and expert firepower it has to throw at developing the technology, has now proved very publicly that this is not yet the case.

It is also interesting that the latest error, which preceded Apple’s change of plan, was an AI summary of content from the Washington Post, as reported by its technology columnist Geoffrey A Fowler.

The news outlet is owned by someone Apple boss Tim Cook knows well: Jeff Bezos, the founder of Amazon.